Quack AI Governance: Navigating the Pitfalls of AI Regulation

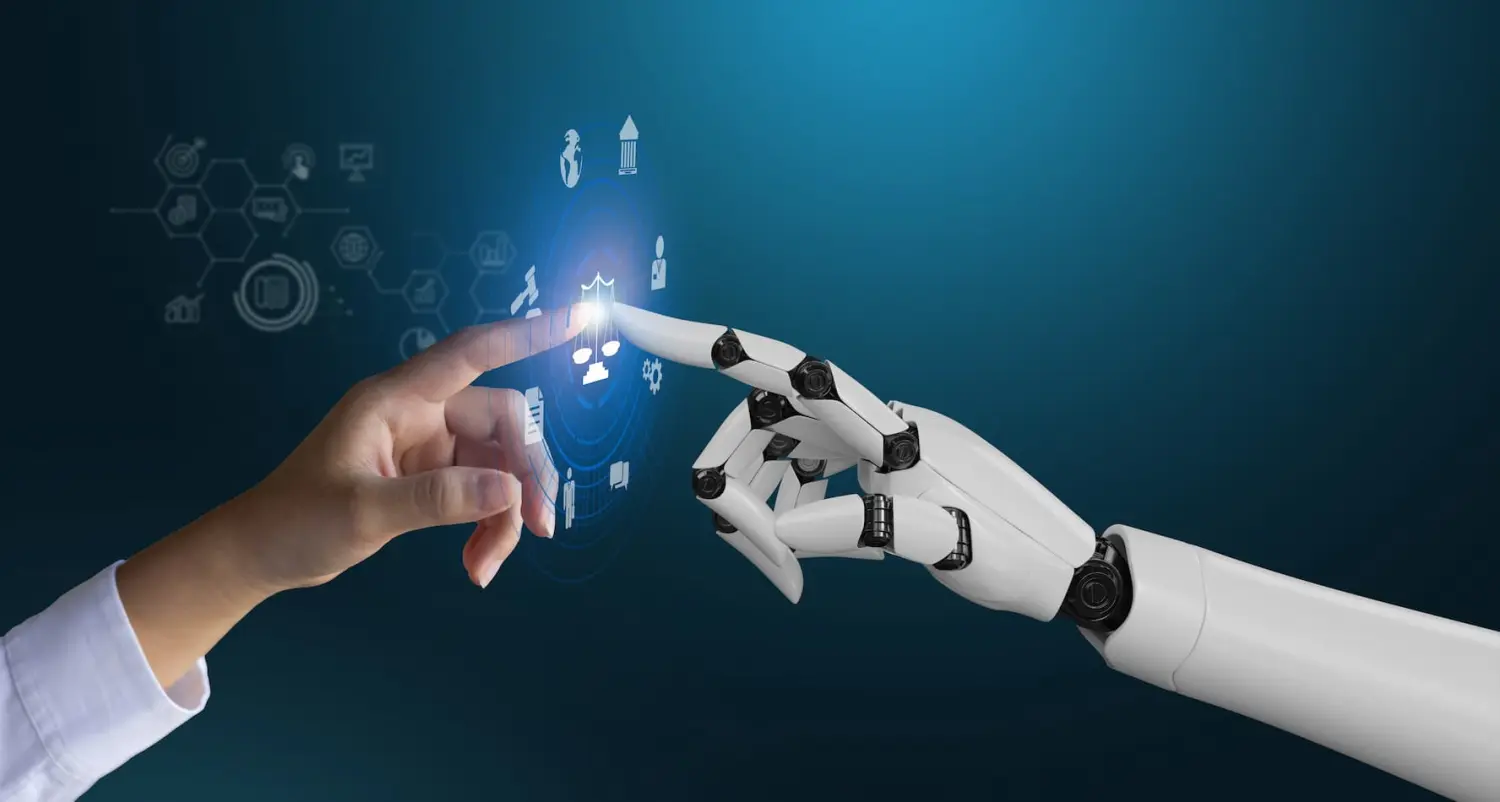

Artificial intelligence is rapidly changing our world, from how we shop to how we receive medical advice. As this technology becomes more powerful, the debate over how to manage it grows louder. This is where AI governance comes in: the rules and frameworks designed to ensure AI is used safely, ethically, and responsibly. However, not all governance is created equal, and the rise of quack AI governance presents a significant challenge. The term refers to policies and practices that look good on the surface but lack real substance, effectiveness, or an understanding of the technology they claim to control.

This article will dive into the world of quack AI governance. We’ll explore what it is, how to spot it, and why it’s so dangerous. Understanding the difference between genuine, robust governance and hollow promises is crucial for anyone interested in the future of technology.

Key Takeaways

- What Quack AI Governance Is: A set of rules or principles for AI that appear legitimate but are ineffective, misleading, or unenforceable in practice.

- Why It’s Dangerous: It creates a false sense of security, allows harmful AI practices to continue unchecked, and erodes public trust in both technology and regulation.

- How to Identify It: Look for vague language, a lack of clear enforcement mechanisms, and policies created without input from technical experts or diverse communities.

- The Path Forward: Effective AI governance requires collaboration between policymakers, tech experts, ethicists, and the public to create clear, adaptable, and enforceable standards.

Understanding the Core Concept: What is AI Governance?

Before we can tackle the “quack” part, let’s establish a clear understanding of what real AI governance is. At its heart, AI governance is the comprehensive framework of rules, practices, and processes that an organization or society uses to direct and control the development and deployment of artificial intelligence. The primary goal is to maximize the benefits of AI while minimizing its potential risks.

Effective AI governance addresses several key areas:

- Ethics: Ensuring AI systems align with human values, fairness, and rights.

- Accountability: Defining who is responsible when an AI system makes a mistake or causes harm.

- Transparency: Making it possible to understand how an AI system makes its decisions.

- Safety and Security: Protecting AI systems from malicious attacks and ensuring they operate as intended without causing unintended harm.

Think of it like the rules of the road for cars. We have speed limits, traffic lights, and driver’s licenses to ensure everyone can use the roads safely. Similarly, AI governance provides the necessary structure to guide AI’s journey into our society, ensuring it serves humanity’s best interests.

Defining “Quack AI Governance”

Now, let’s introduce the problem: quack AI governance. This term describes any framework, policy, or statement about AI oversight that is fundamentally flawed, deceptive, or empty. It’s the equivalent of putting up a “Beware of Dog” sign when you don’t own a dog—it gives the illusion of security without providing any. This type of governance often prioritizes public relations over actual risk management.

A company might publish a beautiful, ten-page document titled “Our Commitment to Ethical AI,” filled with inspiring words but containing no concrete actions, no one in charge of enforcement, and no penalties for violations. That’s a classic example of quack AI governance. It’s designed to appease regulators and the public without requiring the company to change its profitable but potentially risky practices. It’s a performance of responsibility, not the practice of it.

The Dangers of Ineffective AI Oversight

Why is this such a big deal? The dangers of quack AI governance are significant and far-reaching. It’s not just about a company looking bad; it has real-world consequences for individuals and society as a whole.

Firstly, it creates a false sense of security. When the public, investors, and even employees believe that strong ethical guidelines are in place, they are less likely to question the company’s AI applications. This complacency allows potentially harmful systems—like biased hiring algorithms or flawed facial recognition software—to operate without scrutiny. People might trust an AI’s decision because they assume it’s governed by strict rules, when in reality, those rules are meaningless. This misplaced trust can lead to discriminatory outcomes, financial loss, or even physical harm.

Secondly, quack AI governance allows bad actors to thrive. It provides cover for organizations that want to exploit AI for profit or control, without regard for the ethical implications. They can point to their “governance” documents as proof of their good intentions, all while their algorithms perpetuate societal biases or violate privacy. It undermines the efforts of companies that are genuinely trying to implement robust and meaningful governance, as it becomes harder to tell the difference between the two.

How to Spot Quack AI Governance in the Wild

Learning to identify quack AI governance is a critical skill for consumers, investors, and policymakers alike. It often hides in plain sight, dressed up in corporate jargon and lofty promises. Here are some key red flags to watch out for.

Vague and Ambiguous Language

One of the most common signs of hollow governance is the use of vague, feel-good terms that are never clearly defined. Phrases like “we are committed to fairness,” “we strive for transparency,” or “we aim to be ethical” sound great but mean very little without specifics.

- What to look for: A lack of concrete definitions. What does “fairness” mean in the context of your algorithm? Is it equality of outcome? Equality of opportunity?

- A better alternative: “Our hiring algorithm is regularly audited to ensure that job ad delivery rates for candidates over 40 are no more than 5% lower than for candidates under 40.” This is specific, measurable, and auditable.
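To show why a quantified commitment like this is auditable where “we are committed to fairness” is not, here is a minimal Python sketch of the check it implies. It assumes “no more than 5% lower” means a relative shortfall (the over-40 delivery rate is at least 95% of the under-40 rate) rather than a five-percentage-point gap; the `GroupStats` record and function names are hypothetical.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class GroupStats:
    """Hypothetical audit record: ads shown vs. eligible candidates in one age group."""
    shown: int
    eligible: int

    def delivery_rate(self) -> Fraction:
        if self.eligible <= 0:
            raise ValueError("group has no eligible candidates")
        return Fraction(self.shown, self.eligible)


def passes_age_delivery_audit(
    over_40: GroupStats,
    under_40: GroupStats,
    max_relative_shortfall: Fraction = Fraction(5, 100),
) -> bool:
    """True if the over-40 delivery rate is at most 5% (relative) below the under-40 rate."""
    reference = under_40.delivery_rate()
    if reference == 0:
        return True  # nothing for the over-40 group to fall short of
    shortfall = (reference - over_40.delivery_rate()) / reference
    return shortfall <= max_relative_shortfall


# Made-up numbers: 48% vs. 50% is a 4% relative shortfall, so it passes.
print(passes_age_delivery_audit(GroupStats(480, 1000), GroupStats(500, 1000)))  # True
print(passes_age_delivery_audit(GroupStats(460, 1000), GroupStats(500, 1000)))  # False
```

Exact fractions avoid floating-point surprises right at the 5% boundary. A real audit would also have to settle how candidates are counted as “eligible,” which the example policy leaves open.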

Lack of Clear Accountability

If an AI governance policy doesn’t clearly state who is responsible for its implementation and enforcement, it’s likely just for show. A policy without an owner is an orphan; no one will take care of it.

- What to look for: No mention of a specific role (like a Chief Ethics Officer), committee, or department tasked with oversight. The policy might also lack a clear process for reporting violations or concerns.

- A better alternative: “Our AI Ethics Committee, chaired by the Chief Technology Officer, will review all high-risk AI projects quarterly. Employees can report concerns anonymously through our dedicated ethics hotline, and all reports will be investigated within 30 days.”
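A stated deadline only means something if someone checks it. The sketch below shows one way the 30-day investigation commitment could be monitored; the `EthicsReport` record is a hypothetical assumption that stores only an ID and dates, so anonymous reports stay anonymous.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# The 30-day window comes from the example policy text.
INVESTIGATION_WINDOW = timedelta(days=30)


@dataclass
class EthicsReport:
    """Hypothetical record: an ID and dates only, no reporter identity."""
    report_id: str
    received: date
    investigated: Optional[date] = None  # None while the investigation is still open

    @property
    def deadline(self) -> date:
        return self.received + INVESTIGATION_WINDOW

    def missed_deadline(self, today: date) -> bool:
        if self.investigated is not None:
            return self.investigated > self.deadline  # closed, but too late
        return today > self.deadline  # still open past the deadline


def reports_missing_deadline(reports: List[EthicsReport], today: date) -> List[str]:
    """IDs of reports not investigated within the 30-day window as of `today`."""
    return [r.report_id for r in reports if r.missed_deadline(today)]


reports = [
    EthicsReport("R1", date(2024, 1, 1)),  # open and overdue
    EthicsReport("R2", date(2024, 1, 20)),  # open, still inside the window
    EthicsReport("R3", date(2024, 1, 1), investigated=date(2024, 1, 15)),
    EthicsReport("R4", date(2024, 1, 1), investigated=date(2024, 2, 10)),  # closed late
]
print(reports_missing_deadline(reports, today=date(2024, 2, 5)))  # ['R1', 'R4']
```

Counting late closures as misses, not just open reports, keeps the check honest: a report quietly closed on day 40 still broke the promise.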

No Mention of Enforcement or Consequences

A rule without a consequence for breaking it is just a suggestion. A key indicator of quack AI governance is the complete absence of any mention of what happens when the principles are violated.

- What to look for: The policy details what should happen but is silent on what happens when it doesn’t. There are no stated penalties, remediation processes, or commitments to halt projects that fail an ethics review.

- A better alternative: “AI projects found to be in breach of our fairness principles will be immediately suspended. The project team must present a remediation plan to the AI Ethics Committee before development can resume. Deliberate violations may result in disciplinary action.”
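The suspend-then-remediate rule can be expressed as a small state machine, which makes its key property explicit: a suspended project cannot resume until a remediation plan is approved. This is a hypothetical sketch; `AIProject` and its methods are illustrative names, not any real tool's API.

```python
from enum import Enum


class ProjectStatus(Enum):
    ACTIVE = "active"
    SUSPENDED = "suspended"


class AIProject:
    """Hypothetical tracker for the suspend-then-remediate rule."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.status = ProjectStatus.ACTIVE
        self.remediation_approved = False

    def record_breach(self) -> None:
        # A confirmed breach of the fairness principles suspends the project at once.
        self.status = ProjectStatus.SUSPENDED
        self.remediation_approved = False

    def approve_remediation_plan(self) -> None:
        # Stands in for the AI Ethics Committee accepting the team's remediation plan.
        if self.status is not ProjectStatus.SUSPENDED:
            raise RuntimeError(f"{self.name} is not suspended")
        self.remediation_approved = True

    def resume(self) -> None:
        # Development may resume only after the plan has been approved.
        if self.status is ProjectStatus.SUSPENDED and not self.remediation_approved:
            raise PermissionError(f"{self.name}: remediation plan not yet approved")
        self.status = ProjectStatus.ACTIVE
        self.remediation_approved = False


project = AIProject("resume-screener")
project.record_breach()
try:
    project.resume()
except PermissionError as err:
    print(err)  # resume-screener: remediation plan not yet approved
project.approve_remediation_plan()
project.resume()
print(project.status.value)  # active
```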

The “Ethics Washing” Phenomenon

“Ethics washing” is a term that perfectly captures the intent behind much of quack AI governance. It’s a spin on “greenwashing,” where companies pretend to be more environmentally friendly than they really are. In this case, companies use the language of ethics to mask irresponsible or harmful AI development. They might sponsor an ethics conference, publish a glossy report, or partner with a university to create an “AI Ethics Lab”—all while continuing to deploy biased algorithms internally. It’s a public relations strategy designed to deflect criticism and regulation. This approach is particularly damaging because it poisons the well of genuine ethical inquiry, making the public cynical about all corporate ethics efforts.

Real-World Examples vs. Ideal Scenarios

To make the concept clearer, let’s compare what quack AI governance looks like in practice versus what a robust, effective framework would entail.

| Feature | Quack AI Governance (The Bad) | Robust AI Governance (The Good) |
|---|---|---|
| Policy Language | Uses vague terms like “promote fairness” and “encourage transparency.” | Defines “fairness” with specific metrics (e.g., demographic parity) and sets clear transparency standards (e.g., model cards for all systems). |
| Accountability | States “the company” is committed to ethics, with no individual or group named as responsible. | Appoints a cross-functional AI Ethics Board with veto power over high-risk projects. The board’s members and decisions are documented. |
| Enforcement | No mention of consequences for violating the policy. | Clearly states that projects failing an ethics audit will be halted. Includes a process for remediation and potential disciplinary action. |
| Expert Input | The policy is written solely by the legal and marketing departments. | The framework is co-developed with technical experts, ethicists, social scientists, and representatives from affected communities. |
| Lifecycle | A “set it and forget it” document that is published once and never updated. | A living document that is reviewed and updated annually based on new technological developments, incidents, and societal feedback. |

This table shows the stark difference between a performative policy and a functional one. One is a shield, and the other is a compass.
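Demographic parity, one of the metrics a robust policy might name, asks whether a system selects (predicts the positive outcome for) members of each group at the same rate. Below is a minimal sketch of the usual gap measure: the highest group selection rate minus the lowest. The function names are hypothetical, and the gap that counts as acceptable is a policy choice this comparison does not specify. Libraries such as Fairlearn ship a comparable `demographic_parity_difference` metric.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, Fraction]:
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: Fraction(positives[g], totals[g]) for g in totals}


def parity_gap(predictions: Sequence[int], groups: Sequence[Hashable]) -> Fraction:
    """Demographic parity difference: highest minus lowest group selection rate (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up data: group "a" is selected 2 of 3 times, group "b" 1 of 3 times.
preds = [1, 0, 1, 1, 0, 0]
grps = ["a", "a", "a", "b", "b", "b"]
print(parity_gap(preds, grps))  # 1/3
```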

The Role of Government and Regulation

While companies have a responsibility to self-regulate, governments play an indispensable role in preventing the spread of quack AI governance. Without legal and regulatory backstops, there is little incentive for some organizations to move beyond mere lip service. Effective government action can set a floor for responsible AI development that all companies must adhere to.

However, governments themselves are not immune to creating their own form of quack AI governance. A law that is too broad, lacks technical understanding, or is unenforceable can be just as problematic as a weak corporate policy. For instance, a regulation that simply says “AI must be fair” without defining fairness or providing a way to measure it is not helpful.

To be effective, AI legislation must be:

- Specific and Clear: It should target specific, high-risk applications of AI (like in justice, employment, and healthcare) with clear requirements.

- Adaptable: Technology moves fast. Regulations need built-in mechanisms for review and updates to keep pace with innovation.

- Collaborative: The best laws are created with input from a wide range of stakeholders, including industry experts, academics, civil rights groups, and the public. As some analysts at Forbes Planet point out, multi-stakeholder collaboration is key to building resilient frameworks.
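A built-in review mechanism can be as plain as recording when each rule was last reviewed and flagging any that have passed their review interval. This hypothetical sketch assumes a one-year default interval, in line with an annual review cycle; the `Rule` type and the rule names in the usage lines are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Rule:
    """Hypothetical record of a governance rule and when it was last reviewed."""
    name: str
    last_reviewed: date
    review_interval: timedelta = timedelta(days=365)  # assumed annual cycle


def rules_due_for_review(rules: List[Rule], today: date) -> List[str]:
    """Names of rules whose review interval has elapsed as of `today`."""
    return [r.name for r in rules if today >= r.last_reviewed + r.review_interval]


rules = [
    Rule("facial-recognition limits", date(2023, 1, 1)),
    Rule("hiring-audit thresholds", date(2024, 3, 1)),
]
print(rules_due_for_review(rules, today=date(2024, 6, 1)))  # ['facial-recognition limits']
```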

Building a Culture of Genuine AI Governance

Moving beyond quack AI governance requires more than just better policies; it requires a cultural shift within organizations. A document sitting on a server does nothing. True governance is embedded in the daily practices of developers, product managers, and executives.

From Principles to Practice

The most important step is translating high-level principles into concrete actions. If a principle is “human-centric,” what does that mean for the user interface designer? It might mean building in an “undo” button or ensuring a human can always override the AI’s recommendation.
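One way the “a human can always override” principle could show up in code is a decision record in which the human's choice, when present, always wins over the model's suggestion. The `Decision` class is a hypothetical sketch; requiring a written reason for each override is an added assumption, included because it leaves an audit trail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """Hypothetical record pairing a model suggestion with an optional human override."""
    ai_recommendation: str
    human_override: Optional[str] = None
    override_reason: Optional[str] = None

    def override(self, outcome: str, reason: str) -> None:
        # Assumption: every override must carry a written reason for later audit.
        if not reason.strip():
            raise ValueError("an override must record a reason")
        self.human_override = outcome
        self.override_reason = reason

    @property
    def final_outcome(self) -> str:
        # A human decision, when present, always takes precedence over the model's.
        return self.human_override if self.human_override is not None else self.ai_recommendation


decision = Decision(ai_recommendation="deny")
decision.override("approve", reason="Applicant supplied missing income documents")
print(decision.final_outcome)  # approve
```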

Fostering Internal Education

Engineers and data scientists need to be trained not just in how to build AI, but in how to think about its societal impact. Regular workshops on topics like bias, fairness, and transparency can help build a shared language and understanding of ethical responsibilities across the organization. This empowers employees at all levels to spot potential issues early in the development process, long before a product reaches the public.

The Importance of Diversity in Tech Teams

A homogeneous team is more likely to have blind spots that can lead to biased or harmful AI. A team with diverse backgrounds (in terms of race, gender, socioeconomic status, and disability) is better equipped to anticipate how an AI system might affect different communities. This diversity is not just a social good; it is a crucial component of risk management and robust governance.

Conclusion: Demanding Authenticity in AI Oversight

The rise of artificial intelligence offers incredible promise, but it also comes with significant risks. The spread of quack AI governance is one of the most subtle yet severe threats to realizing AI’s potential for good. It creates a dangerous illusion of safety, erodes public trust, and allows irresponsible innovation to proceed unchecked.

As individuals, employees, and citizens, we must become more discerning. We need to look past the buzzwords and demand evidence of genuine commitment to ethical AI. This means asking hard questions: Who is accountable? What are the consequences for failure? How are you ensuring your system is fair and transparent?

Defeating quack AI governance requires a collective effort. Companies must move from performance to practice, embedding ethics into their culture. Governments must create smart, adaptable regulations that set clear and enforceable standards. And all of us must learn to distinguish authentic, robust oversight from the hollow promises designed to placate us. The future of trustworthy AI depends on it.

Frequently Asked Questions (FAQ)

Q1: Is all corporate AI ethics talk just “quack AI governance”?

Not at all. Many companies are making genuine, significant investments in building robust AI governance frameworks. The key is to look for the signs of authenticity: specificity, clear lines of accountability, enforcement mechanisms, and input from diverse experts.

Q2: What’s the single biggest red flag for quack AI governance?

The lack of a clear enforcement mechanism is arguably the biggest giveaway. If a policy outlines a set of rules but is silent on what happens when someone breaks them, it’s very likely a performative document with no real power.

Q3: Can a small company afford to have robust AI governance?

Yes. Robust governance isn’t about spending millions on a huge compliance department. It’s about building a culture of responsibility. This can start with simple, low-cost steps like creating a checklist for ethical considerations before starting a new project, holding regular team discussions about AI impact, and being transparent with users about how AI is being used.
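A checklist like this can be as simple as a list of questions that must each have a written answer before work begins. The questions and function names in this sketch are illustrative assumptions, not a recognized standard.

```python
from typing import Dict, List, Optional

# Illustrative questions only; a real team would write its own list.
ETHICS_CHECKLIST = (
    "Who could be harmed if the system gets it wrong?",
    "Has the training data been checked for missing or under-represented groups?",
    "Will users be told when AI is involved?",
    "Can a person review or override the system's output?",
    "Who on the team is accountable for this system?",
)


def unanswered_items(answers: Dict[str, Optional[str]]) -> List[str]:
    """Checklist questions that still lack a non-empty written answer."""
    return [q for q in ETHICS_CHECKLIST if not (answers.get(q) or "").strip()]


def ready_to_start(answers: Dict[str, Optional[str]]) -> bool:
    """A project may start only once every question has been answered."""
    return not unanswered_items(answers)


answers = {q: "Discussed at kickoff; see meeting notes" for q in ETHICS_CHECKLIST}
answers[ETHICS_CHECKLIST[2]] = ""
print(ready_to_start(answers))  # False
print(unanswered_items(answers))  # ['Will users be told when AI is involved?']
```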

Q4: How is “quack AI governance” different from just having a bad policy?

The difference often lies in intent. A bad policy might be the result of incompetence or a lack of resources. Quack AI governance is often a deliberate act of “ethics washing”—creating a policy with the specific intention of misleading the public and regulators into believing the organization is more responsible than it actually is.

Q5: What can I do as a consumer to fight against this?

Be a critical consumer of technology. Ask questions about the products you use. Support companies that are transparent about their AI practices and data usage. Voice your concerns when you see AI being used in ways that seem unfair or irresponsible. Public pressure is a powerful tool for encouraging companies to move beyond quack AI governance.